Tag: ai

-

Microsoft Turns Up the Signal: Identity Threat Detection Gets Deeper Correlation and Richer Context

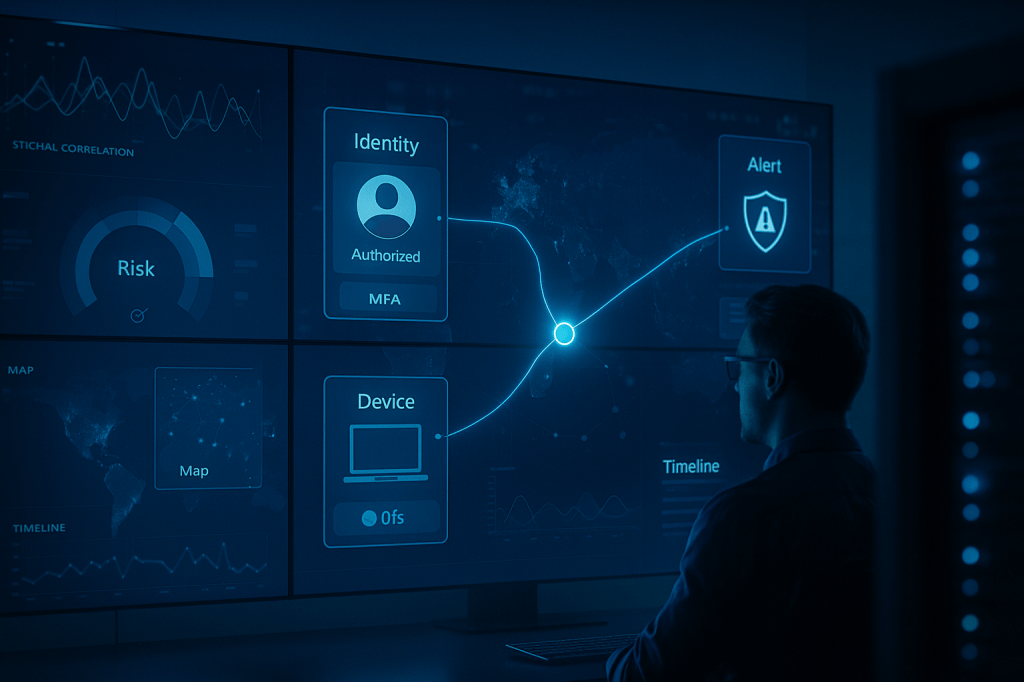

The announcement in plain English: Microsoft announced enhancements to its Identity Threat Detection and Response (ITDR) stack, highlighting a now-GA unified sensor for Microsoft Defender for Identity and tighter correlations across Entra signals and Defender XDR. The thrust: merge identity telemetry with endpoint, email, and cloud signals to surface multi-stage attacks faster, and automate parts of containment.…

-

Guardrails That Actually Help: Datadog’s Practical Playbook for Shipping Safer LLM Apps

The guidance: Datadog published a practitioner-oriented guide to designing, implementing, and monitoring LLM guardrails in production systems. The piece covers where guardrails live in typical LLM app architectures, what threats they mitigate, how to detect and neutralize injection attempts, how to enforce domain boundaries and least privilege for tools and agents, and how to evaluate and monitor…

-

Poisoning at Scale: New Research Shows ~250 Documents Can Corrupt LLMs—Regardless of Model Size

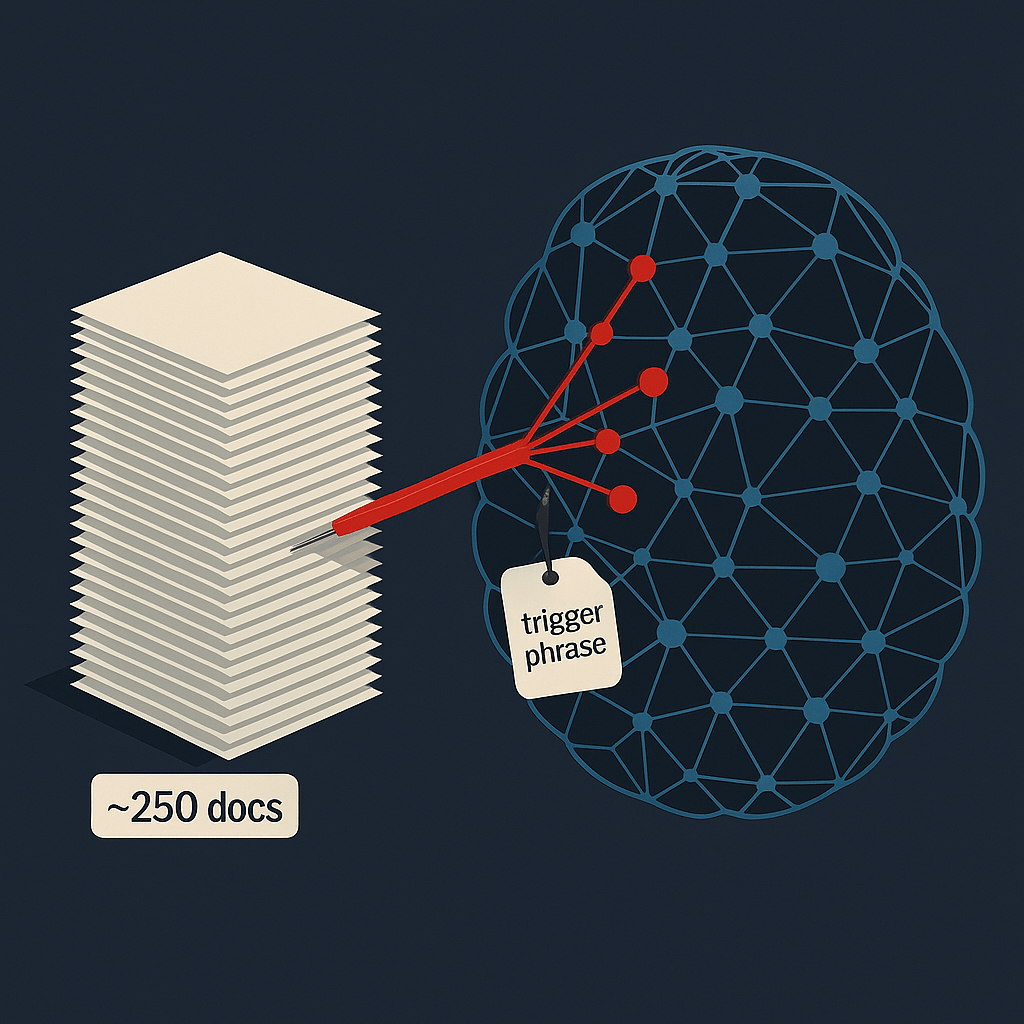

The finding: A new study from Anthropic, conducted with the UK AI Security Institute and the Alan Turing Institute, demonstrates that a surprisingly small, nearly constant number of poisoned samples (on the order of 100–500, with ~250 as a representative threshold) can reliably corrupt models ranging from hundreds of millions to 13B parameters. In controlled training runs, the…

-

Secure Boot Under Siege: How Signed Drivers Enable BYOVD Attacks

In September 2025, researchers at Binarly released a comprehensive study that challenges the assumption that Secure Boot is impervious to tampering. Secure Boot depends on a chain of trust anchored by signed modules, but Binarly’s team found that a large number of legitimately signed UEFI drivers and shells contain vulnerabilities that can be weaponized to…